ROBOTS TXT FILE EXPLAINED

WHAT IS A ROBOTS.TXT FILE

• The Robots TXT File is a plain text file in your website’s root folder that tells bots which parts of your site they may crawl.

• The Robots TXT File tells Googlebot and other search engine bots which pages to crawl (and not to crawl) on a website.

• Search engine bots such as Googlebot, also known as Spiders, browse the Internet looking for new webpages to crawl and index.

• The Robots TXT file instructs Spiders to crawl certain pages and ignore others, which saves crawl budget.

• Crawl budget is the limited number of pages that Spiders will crawl on your website in a given period.

• If your crawl budget is used up on unimportant pages, valuable pages may be missed and not listed on Google.
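
To make the points above concrete, here is a minimal sketch that uses Python's standard `urllib.robotparser` module to read a small robots.txt file and report which pages a bot may crawl. The rules, the folder names (`/cart/`, `/search/`) and the helper `is_crawlable` are made up for illustration; they are not taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules; the folder names are examples only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search/
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given rules let this bot crawl the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://www.yoursite.com/services",
        "https://www.yoursite.com/cart/checkout",
    ):
        verdict = "crawl" if is_crawlable(ROBOTS_TXT, url) else "skip"
        print(url, "->", verdict)
```

With these example rules, the services page stays open to Spiders while anything under `/cart/` is skipped, so crawl budget is not spent there.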

REASONS TO USE A ROBOTS TXT FILE

IMPROVES YOUR SEO

Telling Google’s bots which pages to skip helps SEO, because crawl budget is then spent on your primary pages.

A well-configured Robots TXT File makes it more likely that the valuable pages of your website are found and listed on Google.

That gives your website a better chance of landing on Page 1 of Google for valuable keywords.

AVOIDS DUPLICATE CONTENT ISSUES

Two webpages containing the same information can split your website’s SEO power and confuse Google.

This is known as Duplicate Content, and it can prevent your website from reaching the Top Spots on Google.

The Robots TXT file can tell Google not to crawl one of the two pages, leaving the other as the page you want indexed and ranked on Google.
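
As a rough sketch of the idea, suppose a site keeps printer-friendly copies of its pages under a `/print/` folder. That folder, the URLs and the helper `crawlable_versions` are assumptions made up for this example. Blocking the folder leaves only the main version open to crawling:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical setup: /print/ holds printer-friendly copies of the main pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /print/
"""


def crawlable_versions(robots_txt: str, duplicate_urls: list[str]) -> list[str]:
    """Return the URLs from a group of duplicates that bots may still crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in duplicate_urls if parser.can_fetch("*", url)]


if __name__ == "__main__":
    duplicates = [
        "https://www.yoursite.com/seo-guide",
        "https://www.yoursite.com/print/seo-guide",
    ]
    print(crawlable_versions(ROBOTS_TXT, duplicates))
```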

WEBSITE CONSTRUCTION

Websites undergoing development are not ready to be listed on Google.

The Robots TXT File tells search engine bots not to crawl these pages while your website is not presentable yet.

Do not forget to change the rules of your robots.txt file when you are ready to go live, or your website won’t be indexed.

FREQUENTLY ASKED QUESTIONS

Where is the robots.txt file located?

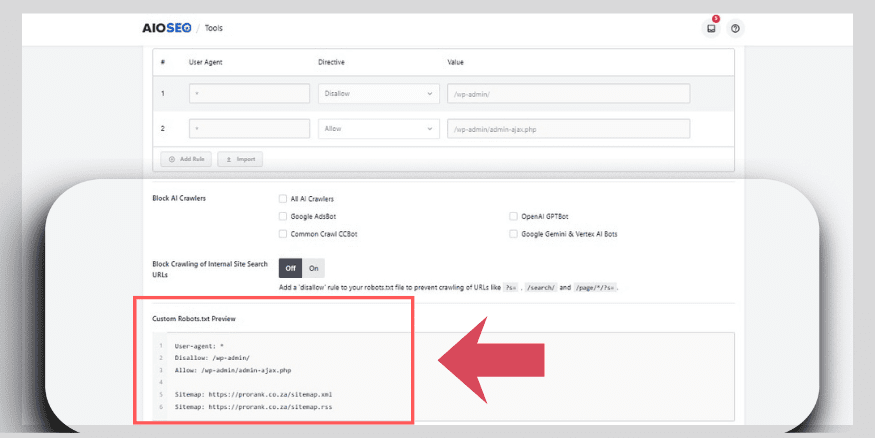

It’s placed in the root directory of your website, www.yoursite.com/robots.txt.
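
Because the file always sits at the root, its address can be worked out from any page on the same site. A small sketch, where the helper name `robots_txt_url` and the sample page URL are illustrative only:

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Build the robots.txt address for the site that hosts page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    print(robots_txt_url("https://www.yoursite.com/blog/post?id=3"))
    # prints https://www.yoursite.com/robots.txt
```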

Can I block my entire website with robots.txt?

Yes. Add User-agent: * and Disallow: / on separate lines, but be careful, as this blocks search engines from crawling every page. It is typically done while the website is under construction.
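
The sketch below shows the two states side by side: a block-everything file for a site under construction, and an open file for launch day. The variable names and the helper `home_page_is_blocked` are invented for this example, and it only checks the home page rather than the whole site.

```python
from urllib.robotparser import RobotFileParser

# Blocks every page: suitable only while the site is under construction.
UNDER_CONSTRUCTION = """\
User-agent: *
Disallow: /
"""

# An empty Disallow value blocks nothing, so every page may be crawled.
LIVE = """\
User-agent: *
Disallow:
"""


def home_page_is_blocked(robots_txt: str,
                         home_url: str = "https://www.yoursite.com/") -> bool:
    """Return True if the rules stop bots from crawling the home page."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch("*", home_url)


if __name__ == "__main__":
    print("under construction:", home_page_is_blocked(UNDER_CONSTRUCTION))
    print("live:", home_page_is_blocked(LIVE))
```

Running a check like this before launch is one way to catch a forgotten Disallow: / rule.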

Does robots.txt prevent pages from being indexed?

Not exactly. It stops crawling, but a blocked page can still appear in search results if another website links to it.

Can robots.txt speed up crawling?

Yes! When you block unnecessary pages, you help search engines focus on important content.

Can robots.txt affect SEO?

Yes! Blocking important pages can hurt SEO, while a properly configured robots.txt improves crawl efficiency (which is good for SEO).

How do I allow search engines to crawl a page?

Use Allow: /page-url or simply don’t block it in the robots.txt file.
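
Allow is most useful for opening up a single page inside a blocked folder. In this sketch, the `/private/` folder and the press-kit page are hypothetical. The Allow line is listed first because Python's parser applies the first rule that matches, whereas Google applies the most specific (longest) matching rule; with this ordering both give the same answer.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block a folder but keep one page inside it crawlable.
# Allow comes first because urllib.robotparser uses the first matching rule.
ROBOTS_TXT = """\
User-agent: *
Allow: /private/press-kit
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

press_kit_ok = parser.can_fetch("*", "https://www.yoursite.com/private/press-kit")
notes_ok = parser.can_fetch("*", "https://www.yoursite.com/private/notes")

if __name__ == "__main__":
    print("press kit crawlable:", press_kit_ok)
    print("notes crawlable:", notes_ok)
```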

Can I test my robots.txt file?

Yes! Sign up for Google Search Console and open the robots.txt report (which replaced the older robots.txt Tester) to check for errors.
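
You can also run a quick check of your own before uploading the file. The sketch below is one possible approach, not an official tool: the helper `find_rule_problems`, the sample rules and the URLs are all assumptions for illustration. It lists pages that are blocked but should be crawlable, and pages that are crawlable but should be blocked.

```python
from urllib.robotparser import RobotFileParser


def find_rule_problems(robots_txt: str,
                       must_crawl: list[str],
                       must_block: list[str],
                       user_agent: str = "Googlebot") -> list[str]:
    """Compare robots.txt rules against the pages you expect to be open or blocked."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for url in must_crawl:
        if not parser.can_fetch(user_agent, url):
            problems.append(f"blocked but should be crawlable: {url}")
    for url in must_block:
        if parser.can_fetch(user_agent, url):
            problems.append(f"crawlable but should be blocked: {url}")
    return problems


if __name__ == "__main__":
    # Hypothetical draft that blocks the blog by mistake and forgets the cart.
    draft = "User-agent: *\nDisallow: /blog/\n"
    for problem in find_rule_problems(
        draft,
        must_crawl=["https://www.yoursite.com/blog/seo-tips"],
        must_block=["https://www.yoursite.com/cart/"],
    ):
        print(problem)
```

To check a file that is already live, `RobotFileParser` can also download it with `set_url(...)` followed by `read()`, which needs network access.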